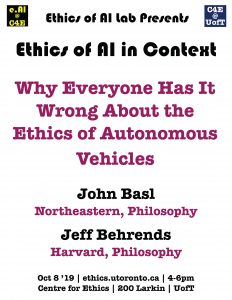

Why Everyone Has It Wrong About the Ethics of Autonomous Vehicles

Many of those thinking about the ethics of autonomous vehicles (AVs) believe there are important lessons to be learned from so-called Trolley Cases, while a growing opposition dismisses their supposed significance. The optimists about the value of these cases think that because AVs might find themselves in circumstances similar to Trolley Cases, we can draw on those cases to ensure ethical driving behavior. The pessimists are convinced that Trolley Cases have nothing to teach us, either because they believe that AV cases and Trolley Cases are in fact very dissimilar, or because they distrust the use of thought experiments in ethics generally.

Something has been lost in the moral discourse between the optimists and the pessimists. We, too, are pessimistic about the ways optimists have leveraged Trolley Cases to draw conclusions about how to program autonomous vehicles, but the typical defenses of pessimism fail to recognize how the tools of moral philosophy can and should be fruitfully applied to AV design. In this talk we first explain what’s wrong with the typical arguments for dismissing the value of Trolley Cases. We then argue that moral philosophers have erred by overlooking the significance of machine learning techniques in AV applications, and we suggest how best to proceed.


John Basl

Northeastern University

Philosophy

Jeff Behrends

Harvard University

Philosophy

Tue, Oct 8, 2019

04:00 PM - 06:00 PM

Centre for Ethics, University of Toronto

200 Larkin