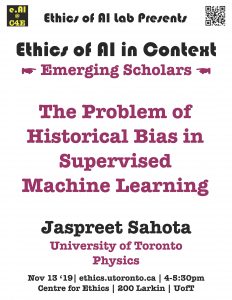

The Problem of Historical Bias in Supervised Machine Learning

Machine learning algorithms are becoming ubiquitous in business and government. Algorithms are routinely deployed to make decisions about how we live: credit adjudication, parole approval, resume screening, insurance costs, and more. Training supervised algorithms on historical data risks perpetuating historical biases in contemporary society. This can lead to a pernicious feedback cycle that should be avoided by eliminating bias from training data and furthering research into deep learning models.

Jaspreet Sahota

Independent Researcher

Ph.D. Physics, University of Toronto

Wed, Nov 13, 2019

04:00 PM - 05:30 PM

Centre for Ethics, University of Toronto

200 Larkin