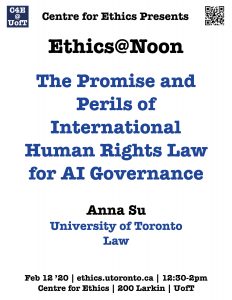

The Promise and Perils of International Human Rights Law for AI Governance

The increasing deployment of artificial intelligence (AI) poses many challenges for human rights. This paper is largely a mapping exercise that explores the advantages and disadvantages of using international human rights law to regulate AI applications. In particular, it examines existing strategies adopted by international bodies, national governments, corporations and non-profit partnerships to govern AI and thereby ensure that its development is consistent with the protection of human rights. Not all of these strategies refer to human rights law or principles. In fact, most of them are self-adopted ethical guidelines or self-regulating norms, drawn from a variety of sources, that aim to mitigate the risks and challenges of AI-powered systems and to identify the opportunities they bring. In recent years, academic and policy literature from a variety of disciplines has emphasized the importance of a human rights-based approach to AI governance. That means identifying risks to recognized human rights, obliging governments to incorporate their human rights obligations into their national policies, and even applying international human rights law itself. This approach was encapsulated in the Toronto Declaration, issued in May 2018 by a group of academics and civil liberties groups, which called on states and companies to meet their existing responsibilities to safeguard human rights. But save for a few exceptions, it remains unclear what that approach concretely looks like, how it would work in practice, and why it is beneficial to adopt it in the first place.


Anna Su

University of Toronto

Law

Wed, Feb 12, 2020

12:30 PM - 02:00 PM

Centre for Ethics, University of Toronto

200 Larkin