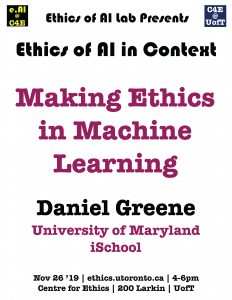

Making Ethics in Machine Learning

Machine learning systems are deployed by all the big tech companies in everything from ad auctions to photo tagging, and they are supplementing or replacing human decision-making in a host of more mundane, but possibly more consequential, areas such as loans, bail, policing, and hiring. We have already seen plenty of dangerous failures: risk assessment tools that systematically rate black arrestees as riskier than white ones, and hiring algorithms that learned to reject women. There is a broad consensus across industry, academe, government, and civil society that there is a problem here, one that presents a deep challenge to core democratic values, but there is much debate over what kind of problem it is and how it might be solved. Taking a sociological approach to the current boom in ethical AI and machine learning initiatives that promise to save us from the machines, this talk explores how this problem comes to be seen as a problem, for whom, and with what solutions. Comparing today's high-profile ethics manifestos with earlier moments in the history of technology reveals a nascent consensus around an approach we term 'ethical design.' At the same time, the recent surge in labor activism inside tech companies and anti-racist organizing outside them suggests how this expert-driven vision for more humane systems might be replaced or augmented by something more revolutionary. This talk draws on research conducted with Anna Lauren Hoffmann (UW), Luke Stark (MSR Montreal), and designer Geneviève Patterson.

Daniel Greene

University of Maryland

iSchool

Tue, Nov 26, 2019

04:00 PM - 06:00 PM

Centre for Ethics, University of Toronto

200 Larkin