Information systems are becoming increasingly reliant on statistical inference and learning to render all sorts of decisions, including the issuing of bank loans, the targeting of advertising, and the provision of health care. This growing use of automated decision-making has sparked heated debate among philosophers, policy-makers, and lawyers, with critics voicing concerns about bias and discrimination. Bias against specific groups may be ameliorated by making the automated decision-maker blind to certain sensitive attributes, but this is difficult, because many other attributes may be correlated with the sensitive one. The basic aim, then, is to make fair decisions, i.e., ones that are not unduly biased for or against specific subgroups in the population. I will discuss various computational formulations of, and approaches to, this problem.
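To illustrate why blinding alone can fall short, here is a minimal toy sketch on hypothetical data (the applicants, the `zip_score` proxy feature, and the 0.5 threshold are all invented for illustration). The decision rule never reads the group attribute, yet because the feature it does use is correlated with group membership, the two groups end up with different approval rates. The gap is measured as a demographic parity difference, which is one common fairness measure among several, not necessarily the formulation discussed in the talk.

```python
# Toy illustration (hypothetical data): a rule that never reads the group
# attribute can still treat groups differently via a correlated feature.

# Each applicant: (group, zip_score). zip_score is a hypothetical proxy
# feature that happens to be correlated with group membership.
applicants = [
    ("A", 0.9), ("A", 0.8), ("A", 0.7), ("A", 0.4),
    ("B", 0.6), ("B", 0.3), ("B", 0.2), ("B", 0.1),
]


def blind_decision(zip_score: float, threshold: float = 0.5) -> bool:
    """Approve based only on the proxy feature; group is never consulted."""
    return zip_score >= threshold


def positive_rate(group: str) -> float:
    """Fraction of applicants in `group` approved by the blind rule."""
    scores = [s for g, s in applicants if g == group]
    return sum(blind_decision(s) for s in scores) / len(scores)


rate_a = positive_rate("A")
rate_b = positive_rate("B")
# Demographic parity difference: one common (but not the only) fairness measure.
parity_gap = rate_a - rate_b
print(f"approval rate A={rate_a:.2f}, B={rate_b:.2f}, gap={parity_gap:.2f}")
# prints "approval rate A=0.75, B=0.25, gap=0.50"
```

Removing the group column from the data changes nothing here, since the rule already ignores it; the disparity comes entirely through the correlated feature.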



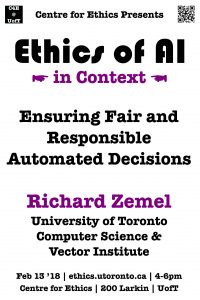

Richard Zemel

Computer Science & Vector Institute

University of Toronto

Tue, Feb 13, 2018

04:00 PM - 06:00 PM

Centre for Ethics, University of Toronto

Rm 200, Larkin Building