As “big data”-based predictive algorithms and generative models become commonplace tools of advertising, design, user research, and even political polling, are these modes of constructing machine-readable models of individuals displacing humans from our world? Are we allowing the messy, unpredictable, illegible aspects of being human to be overwritten by demands that we remain legible to AI and machine learning systems intended to predict our actions, model our behavior, and sell us something? In this talk, technology scholar Molly Sauter looks at how currently deployed modeling systems constitute an attack on personhood and self-determination, particularly in their use in politics and elections. Sauter posits that the use of “big data” in politics strips its targets of subjectivity, turning individuals into ready-to-read “data objects” and making it easier for those in positions of power to justify aggressive manipulation and invasive inference. They further suggest that when big data methodology is used in the public sphere, it is reasonable for these “data objects,” in turn, to resist attempts to be read, known, and manipulated by using tactics like obfuscation, up to the point of actively sabotaging the efficacy of the methodology in general.

[☛ eVideo]

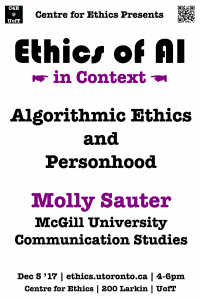

Molly Sauter

Communication Studies

McGill University

Tue, Dec 5, 2017

04:00 PM - 06:00 PM

Centre for Ethics, University of Toronto

Rm 200, Larkin Building