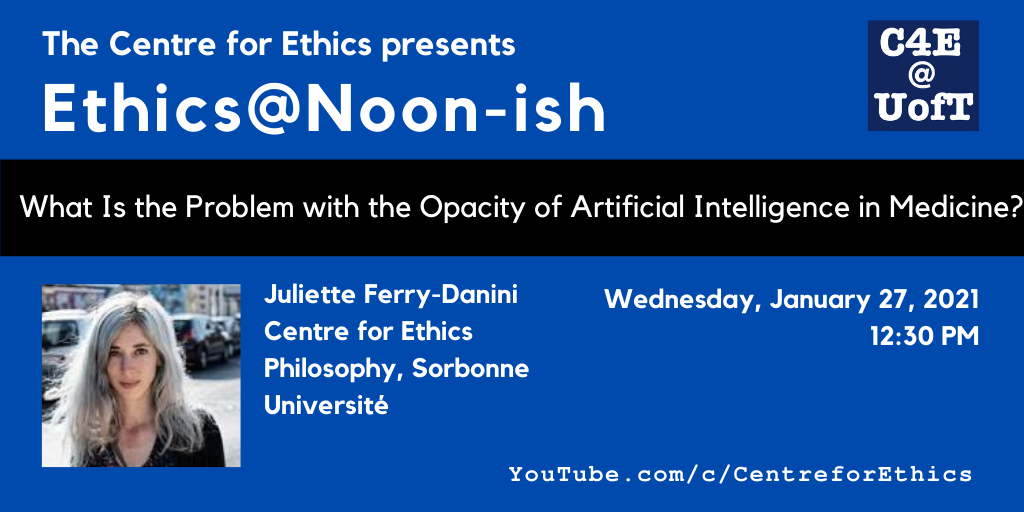

What Is the Problem with the Opacity of Artificial Intelligence in Medicine?

Artificial intelligence has been met with great enthusiasm by the scientific community. However, philosophers, and especially ethicists, have voiced some concerns. The concepts of “opacity” and “transparency” of algorithms have been coined with the presupposition that opacity in AI is something to avoid and, conversely, that transparency is a goal to achieve in the field. Numerous guidelines have been published on the ethics of AI, and they have in turn been the subject of several reviews (Jobin, Ienca, and Vayena 2019; Rothenberger, Fabian, and Arunov 2019; Hagendorff 2020). In these guidelines, transparency is routinely described as one of the key ethical principles the field of AI should follow. The concept, however, is not straightforward. It could first be defined in an epistemic way: an algorithm is transparent if and only if we understand how it works and can explain it. Here transparency could be synonymous with “explainability.” In the case of medicine and decision-making algorithms, the main worry concerns how health professionals could justify a diagnosis without being able to explain how and why they arrived at it (Goodman 2016). However, it could be argued that such epistemic opacity is already constitutive of evidence-based medicine, where mechanisms are often unknown and explanations of efficacy are never certain (London 2019). Yet there is at least a second meaning attached to the concepts of “transparency” and “opacity,” which goes beyond the issue of explainability. In the literature on the ethics of AI, notably, the issues at stake have also been framed in terms of how we came to the knowledge we now claim to have and, more specifically, how the data used to build a specific algorithm were selected.

The aim of this talk will thus be twofold. First, I will map the different meanings of the concept of “transparency” and its mirror concept “opacity,” both in the ethics of AI and in the philosophy of medicine and bioethics. Second, I will pave the way toward understanding in which sense, ethical and/or epistemological, opacity should be avoided both in medicine and in AI (and a fortiori in AI in medicine). What is the problem with the opacity of artificial intelligence in medicine?


This is an online event. It will be live streamed on the Centre for Ethics YouTube Channel on Wednesday, January 27. Channel subscribers will receive a notification at the start of the live stream. (For other events in the series, and to subscribe, visit YouTube.com/c/CentreforEthics.)

Juliette Ferry-Danini

Centre for Ethics Postdoctoral Fellow in Ethics of Artificial Intelligence

Philosophy, Sorbonne Université

Wed, Jan 27, 2021

12:30 PM - 01:45 PM

Centre for Ethics, University of Toronto

200 Larkin