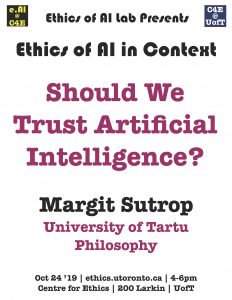

Should We Trust Artificial Intelligence?

Trust is widely believed to be a cornerstone of artificial intelligence (AI). Recently, the European Commission's High-Level Expert Group on AI adopted the Ethics Guidelines for Trustworthy AI (2019), stressing that human beings will only be able to confidently and fully reap the benefits of AI if they can trust the technology.

Although building trust in AI seems to be a shared aim, there is no general agreement on what trust is or what it depends on. In this talk, I will approach trust in AI from a philosophical perspective. On the basis of a conceptual analysis of trust, I shall investigate the conditions under which trust in AI is rational.

Philosophical accounts of trust differ on whether trust involves a belief that the trustee is trustworthy, an affective attitude, or both. There is, however, a consensus that trust involves the acceptance of risk and is incompatible with excessive precautions. Several philosophers have pointed out that reasons for trust are preemptive reasons, i.e. reasons against taking precautions and against weighing the available evidence of somebody's trustworthiness.

We know that, besides bringing substantial benefits to individuals and society, AI can also pose serious risks. We should therefore ask whether it is rational to refrain from taking precautions with respect to AI.

I am going to ask whether, instead of talking about building trust in AI and aiming at trustworthy AI, we should rather focus on the accountability of AI and the trustworthiness of the institutions that design and govern it. I shall also point out that the metaphorical talk of trustworthy AI and ethically aligned AI ignores the real disagreements we have about ethical values.

Margit Sutrop

University of Tartu

Philosophy

Thu, Oct 24, 2019

04:00 PM - 06:00 PM

Centre for Ethics, University of Toronto

200 Larkin