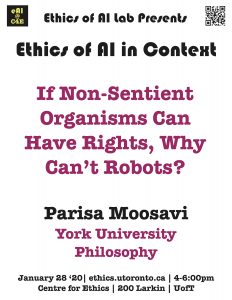

If Non-Sentient Organisms Can Have Rights, Why Can’t Robots?

The fact that artificially intelligent machines are becoming increasingly capable of emulating intelligent human behavior has led some authors to speculate that at some point we will have to grant moral rights to these machines. Some such arguments are indirect and appeal to claims about how our treatment of robots affects us. But when it comes to direct arguments about the moral status of machines, the discussion has mostly focused on the possibility that robots will one day develop sentience or mental capacities such as consciousness and self-awareness.

However, the idea that the capacity for sentience is a necessary condition for moral considerability has been contested. Some environmental ethicists argue that non-sentient biological organisms, species, and ecosystems can potentially have moral status because they have a good of their own. This raises the question of whether non-sentient robots can similarly enjoy moral status.

In this paper, I first give an account of what makes non-sentient organisms potentially morally considerable, and then explain why this moral considerability does not extend to non-sentient robots. I argue that the same considerations that keep us from thinking that the simplest artifacts, like a toaster or a bicycle, have a good of their own also apply to more complex, artificially intelligent machines. Thus, I argue that, unlike biological entities, non-sentient intelligent machines have no greater claim to moral rights than the simplest artifacts.

Parisa Moosavi

York University

Philosophy

Tue, Jan 28, 2020

04:00 PM - 06:00 PM

Centre for Ethics, University of Toronto

200 Larkin