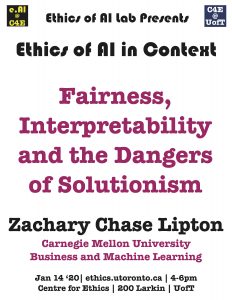

Fairness, Interpretability and the Dangers of Solutionism

Supervised learning algorithms are increasingly operationalized in real-world decision-making systems. Unfortunately, the nature and desiderata of real-world tasks rarely fit neatly into the supervised learning contract. Real data deviates from the training distribution, training targets are often weak surrogates for real-world desiderata, error is seldom the right utility function, and while the framework ignores interventions, predictions typically drive decisions. While the deep questions concerning the ethics of AI necessarily address the processes that generate our data and the impacts that automated decisions will have, neither ML tools nor proposed ML-based mitigation strategies tackle these problems head-on. This talk explores the consequences and limitations of employing ML-based technology in the real world and the limitations of recent solutions (so-called fair and interpretable algorithms) for mitigating societal harms, and it contemplates the meta-question: when should (today's) ML systems be off the table altogether?

Tue, Jan 14, 2020

04:00 PM - 06:00 PM

Centre for Ethics, University of Toronto

200 Larkin